Adding specific libraries to Databricks is a really common requirement. Libraries can be written in Python, Java, Scala, and R. You can upload Java, Scala, and Python libraries, and point to external packages in PyPI, Maven, and CRAN repositories.

Libraries can be added at three scopes: workspace, notebook, and cluster. I want to show you how easy it is to search for and add a library at cluster scope, so that all notebooks attached to the cluster can use it.

Within the Azure Databricks portal, go to your cluster.

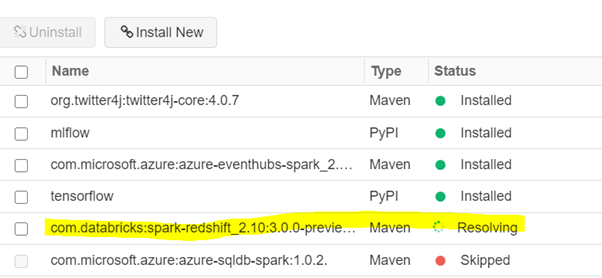

Then go to Libraries > Install New.

As I mentioned at the start of the blog post, you can add many types of library. I use the built-in search to find the library I want.

You don't have to search. If you already know the coordinates of the package, you can enter them directly instead.
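If you prefer scripting to clicking, the same coordinates can be sent to the Databricks Libraries REST API. The snippet below is a minimal sketch that only builds the request body; I am assuming the `POST /api/2.0/libraries/install` endpoint and its `cluster_id` / `libraries` fields as described in the Databricks documentation, so check them against your workspace. The cluster ID and the two packages are placeholder values, not something from this walkthrough.

```python
import json


def build_install_payload(cluster_id, pypi_packages=(), maven_coordinates=()):
    """Build a request body for POST /api/2.0/libraries/install (assumed shape)."""
    # One entry per library; PyPI entries take a package spec,
    # Maven entries take group:artifact:version coordinates.
    libraries = [{"pypi": {"package": p}} for p in pypi_packages]
    libraries += [{"maven": {"coordinates": c}} for c in maven_coordinates]
    return {"cluster_id": cluster_id, "libraries": libraries}


payload = build_install_payload(
    "0000-000000-example",  # hypothetical cluster ID
    pypi_packages=["simplejson==3.8.0"],  # example PyPI spec
    maven_coordinates=["org.jsoup:jsoup:1.7.2"],  # example Maven coordinates
)
print(json.dumps(payload, indent=2))
```

You would then send this body to your workspace URL with a personal access token in the `Authorization` header.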

Click Install.

The status will move through Resolving > Installing > Installed.
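The same states can be checked from a script rather than by watching the portal. This is a rough sketch, assuming the `GET /api/2.0/libraries/cluster-status` endpoint returns a `library_statuses` list whose items carry a `library` spec and a `status` string such as `RESOLVING`, `INSTALLING` or `INSTALLED`. The function names, the host, the token and the sample response are all my own illustrations.

```python
import json
import urllib.request


def fetch_cluster_status(host, token, cluster_id):
    """Call the (assumed) cluster-status endpoint and return the parsed JSON."""
    url = f"{host}/api/2.0/libraries/cluster-status?cluster_id={cluster_id}"
    req = urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def summarize(status_response):
    """Map each library spec (as a JSON string) to its reported status."""
    return {
        json.dumps(item["library"], sort_keys=True): item["status"]
        for item in status_response.get("library_statuses", [])
    }


def all_installed(status_response):
    """True only if at least one library is listed and every one is INSTALLED."""
    statuses = list(summarize(status_response).values())
    return bool(statuses) and all(s == "INSTALLED" for s in statuses)


# Hand-written sample response, not real output from a workspace.
sample = {
    "cluster_id": "0000-000000-example",
    "library_statuses": [
        {"library": {"pypi": {"package": "simplejson==3.8.0"}}, "status": "INSTALLING"},
    ],
}
print(summarize(sample))
print(all_installed(sample))
```

In practice you would call `fetch_cluster_status` in a loop with a short sleep until `all_installed` returns true, or until a library reports a failure.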

Note: this is worth remembering. When you install a library on a cluster that already has a notebook attached, the notebook will not immediately see the new library. You must first detach the notebook and then reattach it to the cluster.
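For a Python library, a quick way to confirm that the notebook can now see it is to ask the interpreter whether the module can be found. This is a small sketch using only the standard library; `is_available` is a helper name I made up, and `simplejson` is just an example module name to swap for whatever you installed.

```python
import importlib.util


def is_available(module_name):
    """Return True if a top-level module can be located without importing it."""
    return importlib.util.find_spec(module_name) is not None


# Run this in a notebook cell after reattaching to the cluster.
print(is_available("simplejson"))
```

If this prints `False` after the cluster shows the library as installed, detaching and reattaching the notebook is the first thing to try.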
